In 2016 I worked with a non-profit, community-based security group in Cape Town, developing alternative technical solutions for license plate recognition (LPR). Their existing system transmitted video feeds over wireless links directly to a central control room, where all the LPR processing was done. The architecture had numerous problems, but the biggest was that the wireless links were prone to interference and dropped out regularly.

I built this proof of concept around the OpenALPR project to demonstrate LPR running on a Raspberry Pi. We wanted to trial an alternative approach that runs “at the edge”: a low-cost device at each camera site does the computation locally, so only the results need to cross the network.

Overview

IP cameras produce an RTSP video feed, which can be pulled directly by Motion, a highly configurable video motion detection daemon. Motion has been in development for many years and is well tuned for performance on low-power devices. In this project, it is configured to write a JPG image whenever the change in the scene exceeds a configured threshold.

These JPGs are stored in a tmpfs mount on the Raspi, and the first service to handle them is the watcher. It listens for filesystem events using notify, a library that wraps Linux inotify and its equivalents on other operating systems. The watcher puts each filename onto a beanstalkd queue, so that a pool of plate_detector workers can consume the jobs concurrently.
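A minimal sketch of the watcher loop in Go, with the external pieces abstracted away so it runs on its own: `Event` is a hypothetical, simplified stand-in for what the notify library delivers, and `JobQueue` is a hypothetical interface standing in for a beanstalkd client, with an in-memory implementation for the demo. It filters for completed JPG files and enqueues their paths. The `Complete` flag is an assumption worth calling out: an inotify create event can fire before Motion has finished writing the file, so a real watcher would likely key off a close-after-write event instead.

```go
package main

import (
	"fmt"
	"path/filepath"
	"strings"
)

// Event is a hypothetical, simplified filesystem event.
type Event struct {
	Path     string
	Complete bool // true once the file is fully written (e.g. inotify close-write)
}

// JobQueue is a hypothetical interface standing in for a beanstalkd
// tube; Put enqueues a job body and returns its ID.
type JobQueue interface {
	Put(body []byte) (uint64, error)
}

// memQueue is an in-memory JobQueue used for demonstration only.
type memQueue struct {
	jobs [][]byte
}

func (q *memQueue) Put(body []byte) (uint64, error) {
	q.jobs = append(q.jobs, body)
	return uint64(len(q.jobs)), nil
}

// watch forwards the path of every completed JPG to the queue and returns
// the number of jobs enqueued once the event channel is closed.
func watch(events <-chan Event, q JobQueue) (int, error) {
	n := 0
	for ev := range events {
		ext := strings.ToLower(filepath.Ext(ev.Path))
		if !ev.Complete || (ext != ".jpg" && ext != ".jpeg") {
			continue // ignore partial writes and non-image files
		}
		if _, err := q.Put([]byte(ev.Path)); err != nil {
			return n, fmt.Errorf("enqueue %s: %w", ev.Path, err)
		}
		n++
	}
	return n, nil
}

func main() {
	// Hypothetical tmpfs paths, for illustration only.
	events := make(chan Event, 4)
	events <- Event{Path: "/mnt/motion/01.jpg", Complete: false}
	events <- Event{Path: "/mnt/motion/01.jpg", Complete: true}
	events <- Event{Path: "/mnt/motion/motion.log", Complete: true}
	events <- Event{Path: "/mnt/motion/02.JPG", Complete: true}
	close(events)

	q := &memQueue{}
	n, err := watch(events, q)
	if err != nil {
		panic(err)
	}
	fmt.Println(n) // 2
	for _, j := range q.jobs {
		fmt.Println(string(j))
	}
}
```

Keeping the queue behind an interface means the watcher does not care whether jobs land in beanstalkd or, as here, a slice.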

Detecting a plate in an image is CPU-intensive, which is why the plate_detector is horizontally scalable: if required, multiple Raspis could share a higher volume of candidate images. The plate_detector calls the native OpenALPR library through CGo, crossing the boundary between the Go runtime and C code. If a plate is detected, the event metadata is pushed onto a separate beanstalkd queue for the next stage.

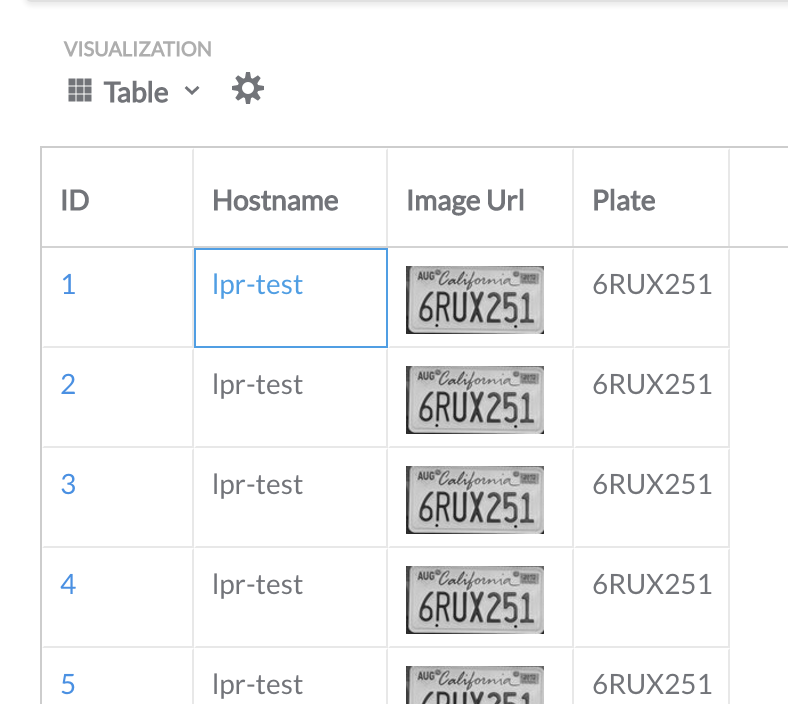

The uploader pulls events from the queue and is responsible for de-duplication, resizing, cropping, and uploading to S3. De-duplication is important, because a single vehicle passing the camera normally produces many JPGs of the same event; a local Postgres DB is used for this. The plate image is extracted using GraphicsMagick, which performs well on the Raspi’s ARM CPU. The image is then cropped, and the entire bundle is uploaded to an Amazon S3 bucket. The event metadata is saved to a Postgres DB running in the cloud, which acts as the central point that multiple cameras connect to.

User interface

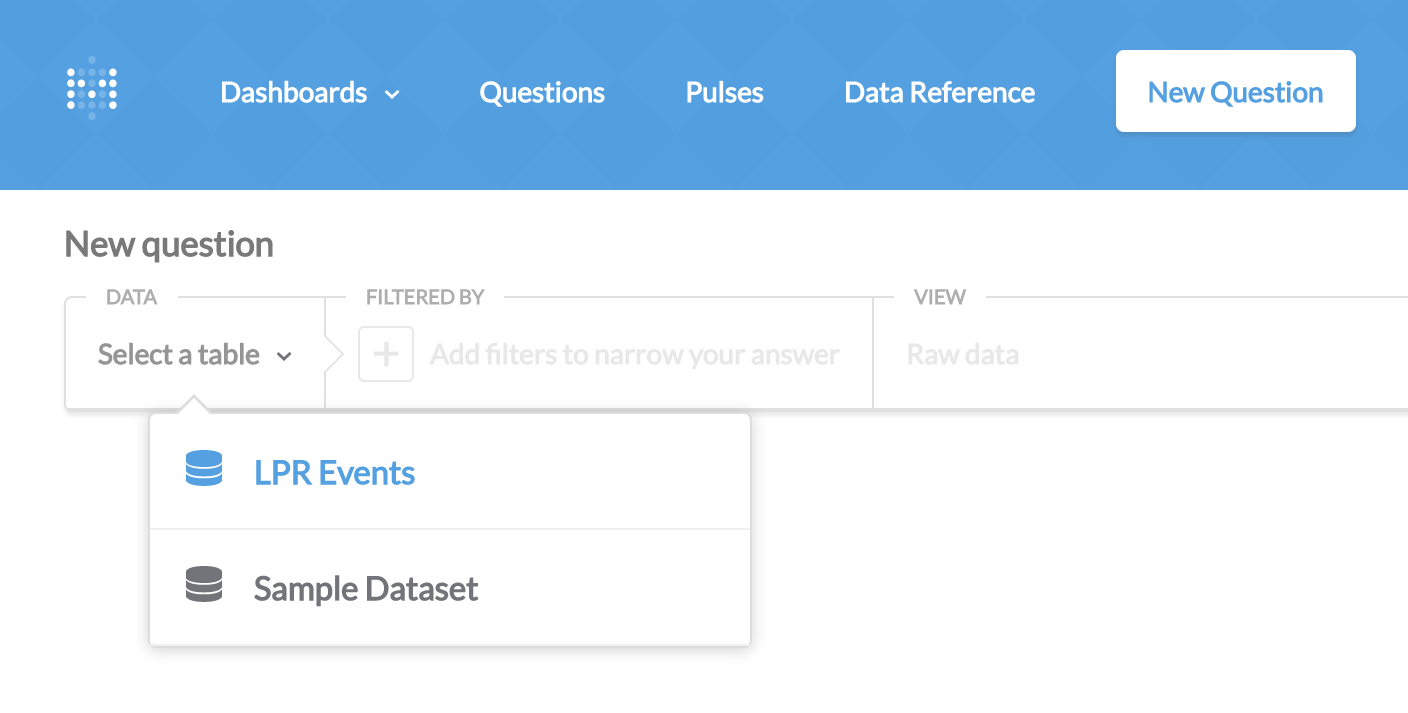

To search the database of events, Metabase runs on the cloud server, providing an easy way to filter and search the plate data with its intuitive “question” builder. I had to write a small patch to get Metabase to display images from Amazon URLs, but this functionality is now built into the main product.

Dependencies

One of the challenges of getting this all working was building the dependencies for the ARM architecture. I didn’t have much luck with cross-compilation, and building on the Raspberry Pi itself was painfully slow. I found it easier to run an ARM cloud server on Scaleway and build the artifacts there, inside a Docker image of the target OS. The Hypriot blog was very helpful in this endeavour.

I created the following repositories and build artifacts for others to use. They build Leptonica, OpenCV, Tesseract, OpenALPR and Postgres:

Project conclusion

The proof of concept was successful; however, a few external factors changed, and as a result we did not go ahead.

The first was that the City of Cape Town began rolling out a fibre network, and a service provider offered to connect all the cameras to a virtual private network, for free. This eliminated the wireless connectivity problems.

The second development was Hikvision’s release of ANPR running on the camera itself, at around the same time that the PoC was developed. We felt that intercepting the events from the camera’s onboard ANPR would be a smarter integration point, and that upgrading to the new ANPR-enabled cameras would be a better investment, and easier to maintain, than a custom solution.