This is the story of how we used cutting-edge artificial intelligence techniques to reduce and prevent crime, in an area plagued with constant incidents.

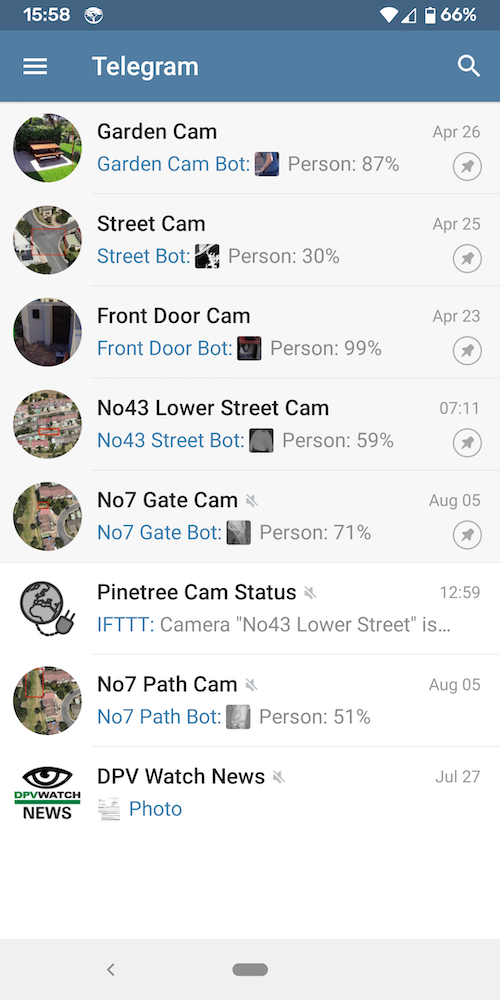

I created the WatchBot A.I. software to detect people in our live camera feeds and send alerts to users’ phones via Telegram. The system has been operating in production in Cape Town since 2018, and in that time it has been used to apprehend many suspects, both before and after actual crimes were committed.

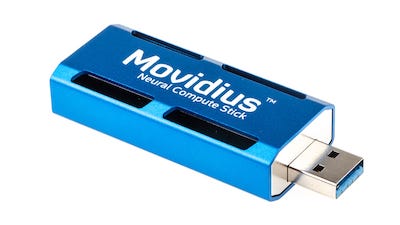

The application, written in Go, runs at the edge using a Raspberry Pi device. The A.I. model is executed on an Intel Movidius Neural Compute Stick.

Crime and privacy in South Africa

South Africa has one of the highest homicide rates in the world, roughly 36 per 100,000 people compared with an international average of 7. In the global rankings, this places the country in the top 10 most dangerous places. Cape Town was ranked as the 8th most violent city in the world in 2020.

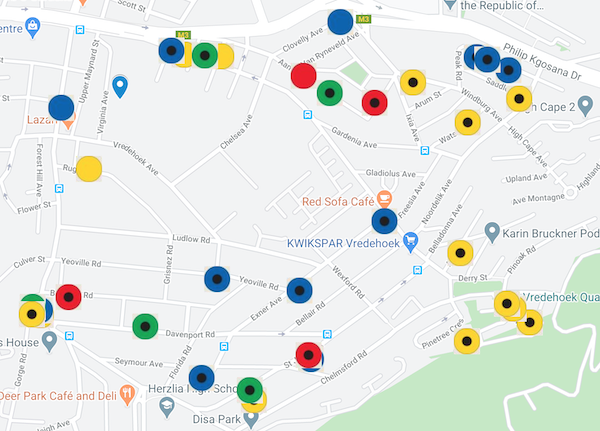

Here is a map of crimes reported to the local neighbourhood watch in the suburb of Vredehoek, Cape Town, for the single month of December 2019. There is nothing special about this month; it is an average one. Data is public on the website:

Each dot represents a crime incident, and the colours show the type of crime committed. More info is available on the site. As you can tell by the map zoom level, this is not a large area - it’s around 100 hectares, or 1 km² (0.39 sq mi).

Living in a neighbourhood with this level of crime is an eye-opener. All around you, people are living in fear of the worst. The police and private security are overwhelmed and powerless to stop it. The responsibility for safety and protection falls to local community groups.

I’m deliberately going to great lengths to set the scene here, as a prelude to introducing the WatchBot project. This is no ordinary neighbourhood. Understanding what’s at stake helps explain why we would enthusiastically support and fund a surveillance system.

Cameras

In response to this crime epidemic, our street came together to install cameras at key points in the crescent. These cameras are individually owned and operated, so the recorded footage is held on the respective camera’s DVR.

Here are two images of the kinds of suspects we wanted to stop, taken from the street cameras. With the camera recordings, we were able to review footage of suspects after a crime was committed, and hand it over to the police - but what if we could get ahead of them, and prevent the incidents from happening in the first place?

WatchBot

The application I created is called WatchBot. It pulls the live video feed directly over RTSP, the common streaming protocol used by H.264 IP cameras. Frames are decoded, processed and then fed into the Neural Compute Stick, which runs an A.I. model known as Single Shot Detector (SSD) MobileNet.

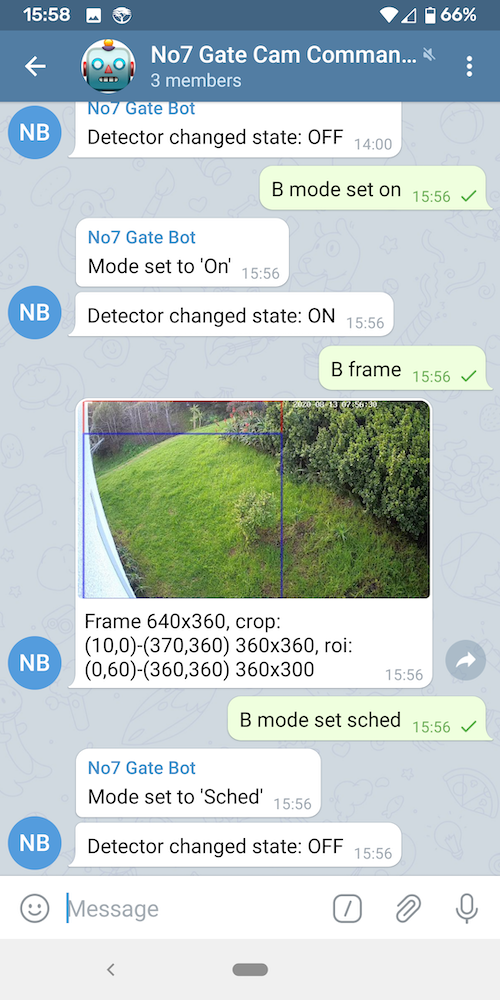

Once objects are identified, WatchBot filters the results, looking for something with the label “person” appearing in a predefined region of interest (ROI). Below are two examples of what a region of interest looks like, overlaid on the camera scene. The red rectangle is the crop and the blue one is the ROI:

If a person is detected, and the current time falls within the predefined alert schedule, WatchBot sends one image of the entire frame to a Telegram channel followed by close-up crops of 6 subsequent frames from the same event. Here’s an example of a real alert coming through Telegram - these suspects were apprehended with housebreaking tools in the middle of the night under one of our cameras:

The video below is footage of suspected thieves attempting to open residents’ car doors, as seen by the algorithm running the model. Think of it as a debugging view - it’s what the A.I. is picking up in the video scene. The detected objects and their bounding boxes are drawn on the video feed in real time.

Here are some pictures of our neighbourhood installation - a cluster of Raspberry Pis running indoors at my house:

These 5 devices provide detection capacity for up to 20 cameras. Notice each one has a Neural Compute Stick plugged into its USB port. The feeds are pulled over the fibre internet connection from other residents’ houses. We subsequently moved the devices to their respective camera locations, which increased reliability as we were no longer relying on a consumer-grade fibre backbone with loads of contention.

I even set up a single device to run outdoors, at the edge in a waterproof housing with wireless connectivity:

Results

We’ve got 20 residents monitoring for alerts between 10pm and 6am every night via their phones. Several of our residents have 2-way radio communication with the police and private security companies. In addition, the central city security control room, known as WatchCom, has joined the Telegram channels to help dispatch the police as quickly as possible. They were already using Telegram for the city’s LPR (license plate recognition) alerts, so the interface is very natural.

The results have been excellent, and the watch wrote a general summary in “Cameras do cut crime”.

User interface

There’s no graphical UI for WatchBot; instead, you issue commands to a Telegram bot to adjust the schedule and settings. You can grab the current live frame from the video feed, or monitor uptime, all using a chat-like interface. Here are some images and a screencast of our live installation, in which I demonstrate some of the bot commands:

Features

Up to 4 cameras can be monitored by a single device

One Raspberry Pi + Neural Compute Stick can alert on up to 4 feeds. This is done using round-robin rotation and sharing the NCS.
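As a rough illustration of round-robin sharing, the sketch below cycles through a fixed list of feeds so that each one takes its turn on the single accelerator. The `RoundRobin` type and the feed names are hypothetical, and the real scheduling in WatchBot may differ.

```go
package main

import "fmt"

// RoundRobin cycles through a fixed set of feed names so that a single
// accelerator can be shared between them, one frame at a time.
type RoundRobin struct {
	feeds []string
	next  int
}

// Next returns the feed whose latest frame should be sent for inference,
// then advances to the following feed, wrapping around at the end.
func (r *RoundRobin) Next() string {
	feed := r.feeds[r.next]
	r.next = (r.next + 1) % len(r.feeds)
	return feed
}

func main() {
	rr := &RoundRobin{feeds: []string{"cam1", "cam2", "cam3", "cam4"}}
	for i := 0; i < 6; i++ {
		fmt.Println("infer on latest frame from", rr.Next())
	}
}
```

With four feeds, each camera effectively gets a quarter of the accelerator's inference throughput, which is one reason to cap the number of feeds per device.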

Integration with external triggers

Detection can be activated and deactivated through Google PubSub integration. I configured my alarm system to toggle camera detection whenever I leave the house.

Send system journal errors to Telegram

The most common error we encounter is under-voltage, which happens when using a bad power supply on the Raspberry Pi. It’s useful to get an alert in Telegram if the device becomes unstable due to low voltage, so that the hardware can be replaced.

Automated restart if the frame rate drops

If the network link between the camera and WatchBot fails or stalls, the frame rate will drop. WatchBot can detect this scenario, and the best remediation is usually to restart the decoding pipeline, which it does automatically.

Telegram rate limiting to prevent flooding

A token bucket is used to control the rate of Telegram messages posted to the channel, to prevent flooding and provide the optimal balance of alert images.

Health checks

The cameras are monitored using Uptime Robot, and heartbeats are sent from the device using Healthchecks.io. If a camera or device is unreachable, Telegram is alerted via an out-of-band channel using IFTTT.

Development

WatchBot is written in Go, with the following dependencies:

gstreamer

Decoding H.264 is an intensive task for a low-powered device like the Raspberry Pi’s ARM CPU. WatchBot offloads the decoding onto the Raspberry Pi’s GPU, via the OpenMAX (OMX) library. The component that achieves this is called omxh264dec. Building a working pipeline was non-trivial and took a lot of trial and error. I spent many days and weeks reading the source and recompiling different versions of gstreamer in order to find a viable pipeline.

There are even separate configurations required for each camera manufacturer, as they use different encoding settings. Here’s an example of what a pipeline might look like:

    rtspsrc
        do-retransmission=false
        latency=0
        protocols=udp
        drop-on-latency=true
        location={url} !
    decodebin !
    videoconvert !
    videorate drop-only=true !
    video/x-raw,framerate=5/1 !
    appsink max-buffers=1 drop=true

You’ll notice a lot of buffer/latency related keywords in there - minimal latency and buffering is a critical requirement of a real-time alert system.

OpenCV for image processing & GoCV for OpenCV’s Go language bindings

GoCV provides a neat wrapper around OpenCV’s video capture library, and importantly it allows you to pass a gstreamer pipeline as an input video source. WatchBot also uses OpenCV to convert the incoming raw video frames into the format expected by the A.I. model, prior to detection.

Intel Movidius Neural Compute Stick

Sadly, Intel has discontinued version 1 of the NCS. Originally I really enjoyed working with the device, and I found it to be very reliable and effective. The main friction I encountered was the total lack of support from Intel: they essentially released the hardware without sufficient investment in the SDK and toolsets. I joined many frustrated users in the forums and GitHub issue queues.

If I were to continue working on the software, I would probably try Google’s Coral device, which is similar in concept.

The go-ncs library was useful to get started interfacing with the NCS from Go, but I also had to write a lot of code to interface with the model (SSD MobileNet) and parse the results. See the file detect.go in the WatchBot source.

Conclusion

This has been a very satisfying project to work on, because it has such tangible real-world impact on my neighbours and the community. I’m fairly certain that the software has thwarted serious crime. Of course, it’s impossible to know what would have happened, had an alert not gone off and police not been dispatched - but that’s a good position to be in.

A.I. detection is coming to a camera near you - I expect that soon the major manufacturers like Axis and Hikvision will drop the prices of their A.I.-powered cameras to a reasonable level, and we will integrate with the system at a different layer - reading the alerts instead of doing the inference. This project will become obsolete, and that’s ok.